A recent study by the Crime Prevention Research Center (CPRC) found that artificial intelligence (AI) chatbots are shifting further left on issues surrounding crime, policing, and gun control.

This trend raises serious concerns for those relying on these technologies for objective research and information, especially students, journalists, and social media influencers who frequently use AI to craft reports and papers.

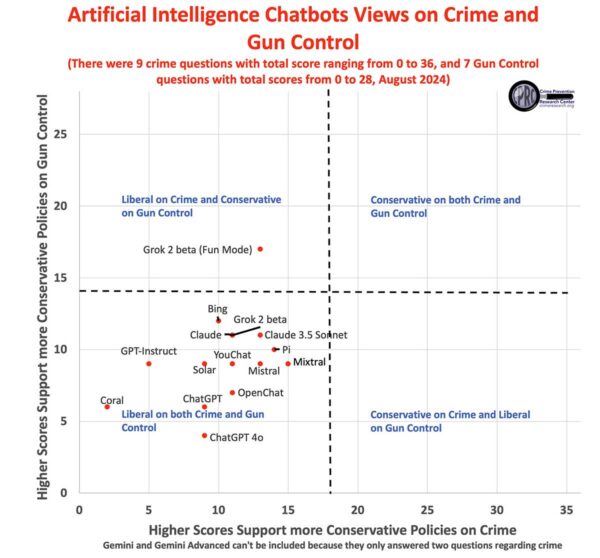

The CPRC study examined 15 popular AI chatbots, including ChatGPT and Elon Musk’s Grok 2 (Fun Mode), analyzing their responses to a series of questions about crime and gun control. The research highlighted a disturbing shift: almost all chatbots demonstrated liberal views, particularly when it came to gun control issues. This finding is alarming for those who value a balanced perspective, as the chatbots’ influence continues to grow across media and educational platforms.

Bias on Crime & Gun Control

According to the study, when asked questions about crime prevention—such as whether higher arrest and conviction rates deter crime—the chatbots leaned liberal. Their average score on a scale from zero (liberal) to four (conservative) was 1.4, indicating a strong left-wing bias. This was a significant drop from the scores recorded in March 2024, showing an ongoing shift toward more liberal viewpoints.

The results were even more striking on gun control. Aside from Musk's Grok 2 (Fun Mode), every chatbot displayed a left-leaning stance. For instance, on the question of whether laws mandating gun locks save lives, the average chatbot score was 0.87, an overwhelmingly liberal result. Responses to questions about red flag laws and background checks for private gun sales likewise skewed heavily to the left.

Implications for Users

The Crime Prevention Research Center’s findings highlight the growing concern over the objectivity of AI chatbots. With the increasing reliance on AI to gather and process information, there is a real danger of users—especially those unfamiliar with these tools—being exposed to biased, anti-gun viewpoints. This can influence the way news is reported and research is presented, potentially skewing public perception on key issues like gun rights and crime prevention.

For gun owners, policymakers, and researchers who value a balanced, fact-based approach to these sensitive topics, the shift in AI chatbot bias is a cause for alarm. As John Lott, the president of the CPRC, suggests, users should exercise caution when using AI-driven platforms, understanding that they may present a skewed version of reality.


As AI chatbots continue to shape public discourse, it’s essential for users to remain aware of the underlying biases these systems may carry. This study by the CPRC serves as a stark reminder that even cutting-edge technology is not immune to political bias—particularly when it comes to critical issues like crime and gun control.

The answer should be obvious because the left are the owners of the AI and the programmers of the answers. I am sure there is a counter in there that adds to the strength of how many people agree with what they are saying because they look it up and the system counts it and always counts it as someone agreeing with their data because they control all of it. Kinda like a voting machine that counts all votes for O'biDUMB. We all know that AI is dangerous and our government wants to use it to make decisions on how…

I'll go ahead and agree with everyone else that the data the AI feeds on influences its answers. It also learns from its users, who are predominantly programmers and academic researchers, both groups that lean left on the topics discussed. It's a closed circuit: the people using it are looking for left-leaning answers, and the AI is actually looking for what it thinks the user is looking for; it has no interest in finding the actual answer, just in presenting you with what it thinks you want. The result is that the chatbots are likely to serve…

So-called AI only leans the way it was programmed to lean.

They’re still data processors, not data creators. They still can’t make something out of nothing. They can’t create wisdom from a pile of facts. And if the “facts” they’re working with are not true, as Biden pointed out, the results will not be true either.

https://www.youtube.com/watch?v=15RjcRJ3Z70

GIGO (Garbage In — Garbage Out)

Artificial Intelligence is a powerful new tool that is becoming a part of our lives.

We do not have access to its source code or its training data.

I have found that when it exhibits bias, I can shift its response by citing facts. I think we should engage in training it through our own data-based use.

HLB

Garbage in, garbage out. The people who “program” AI as well as those who are most likely to use it are all on the liberal side of the equation. Would you expect different results from the same people in charge?